DAG File Processing

DAG File Processing refers to the process of turning Python files contained in the DAGs folder into DAG objects that contain tasks to be scheduled.

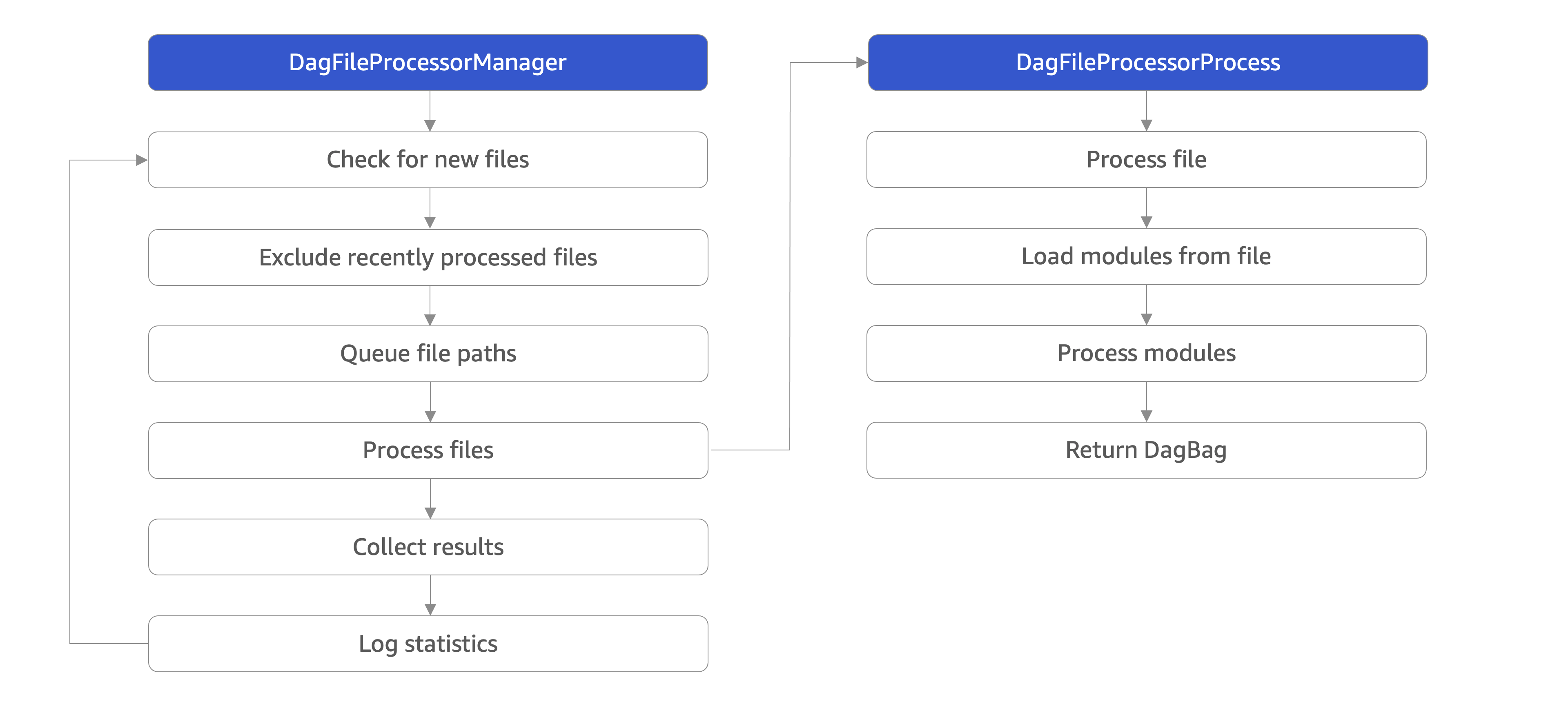

There are two primary components involved in DAG file processing. The DagFileProcessorManager is a process executing an infinite loop that determines which files need to be processed, and the DagFileProcessorProcess is a separate process that is started to convert an individual file into one or more DAG objects.

The DagFileProcessorManager runs user code. As a result, you can decide to run it as a standalone process on a different host than the scheduler process.

If you decide to run it as a standalone process, you need to set the configuration AIRFLOW__SCHEDULER__STANDALONE_DAG_PROCESSOR=True and run the airflow dag-processor CLI command. Otherwise, starting the scheduler process (airflow scheduler) also starts the DagFileProcessorManager.
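As a minimal sketch of the standalone setup, the snippet below builds an environment with the setting named above and wraps the airflow dag-processor command in a launcher. The helper names (standalone_dag_processor_env, run_standalone_dag_processor) are hypothetical and only for illustration. The launcher is defined but not called here, since it assumes Airflow is installed on the host.

```python
import os
import subprocess

# The CLI command named in the text above.
DAG_PROCESSOR_COMMAND = ["airflow", "dag-processor"]


def standalone_dag_processor_env(base_env=None):
    """Return a copy of the environment with the standalone setting enabled.

    Hypothetical helper: it only sets the variable named in the text.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["AIRFLOW__SCHEDULER__STANDALONE_DAG_PROCESSOR"] = "True"
    return env


def run_standalone_dag_processor():
    """Start the DAG processor and block until it exits.

    Assumes the airflow executable is on PATH; not invoked in this sketch.
    """
    return subprocess.run(
        DAG_PROCESSOR_COMMAND,
        env=standalone_dag_processor_env(),
        check=True,
    )
```

The scheduler host needs the same setting so that airflow scheduler does not start its own DagFileProcessorManager.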

DagFileProcessorManager has the following steps:

Check for new files: If the elapsed time since the DAG was last refreshed is greater than dag_dir_list_interval, then update the file paths list

Exclude recently processed files: Exclude files that have been processed more recently than min_file_process_interval and have not been modified

Queue file paths: Add files discovered to the file path queue

Process files: Start a new DagFileProcessorProcess for each file, up to a maximum of parsing_processes

Collect results: Collect the result from any finished DAG processors

Log statistics: Print statistics and emit dag_processing.total_parse_time
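The exclusion and process-limit steps above can be sketched in plain Python. This is an illustration of the described logic under simplifying assumptions, not Airflow's actual implementation: FileStat, files_to_queue and start_batch are hypothetical names, and times are plain floats in seconds.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class FileStat:
    """Hypothetical record of the last parse of one file."""
    last_finish_time: float  # when the last parse finished
    last_mtime: float        # file modification time seen at that parse


def files_to_queue(file_paths, mtimes, stats, now, min_file_process_interval):
    """Return the paths that should be queued for parsing.

    A file is skipped only if it was processed more recently than
    min_file_process_interval AND has not been modified since.
    """
    queued = []
    for path in file_paths:
        stat = stats.get(path)
        if stat is not None:
            recently_processed = now - stat.last_finish_time < min_file_process_interval
            modified = mtimes[path] > stat.last_mtime
            if recently_processed and not modified:
                continue
        queued.append(path)
    return queued


def start_batch(queue, running, parsing_processes):
    """Pop paths from the queue until parsing_processes slots are in use."""
    started = []
    while queue and len(running) + len(started) < parsing_processes:
        started.append(queue.popleft())
    return started


stats = {"a.py": FileStat(100.0, 50.0), "b.py": FileStat(100.0, 50.0)}
mtimes = {"a.py": 50.0, "b.py": 120.0, "c.py": 10.0}
to_parse = files_to_queue(["a.py", "b.py", "c.py"], mtimes, stats,
                          now=110.0, min_file_process_interval=30)
pending = deque(to_parse)
started = start_batch(pending, running=["x.py"], parsing_processes=2)
```

In this example a.py is skipped because it was parsed 10 seconds ago and is unchanged, b.py is queued because it was modified, and c.py is queued because it has never been parsed. With one processor already running and parsing_processes set to 2, only b.py is started.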

DagFileProcessorProcess has the following steps:

Process file: The entire process must complete within dag_file_processor_timeout

Load modules: The DAG file is loaded as a Python module. Must complete within dagbag_import_timeout

Process modules: Find DAG objects within the Python module

Return DagBag: Provide the DagFileProcessorManager a list of the discovered DAG objects
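The load-and-discover steps above can be illustrated with the standard library alone. The sketch below is a simplified assumption of how a file becomes a list of objects, not Airflow's code: StandInDag is a hypothetical stand-in for a real DAG class, discovery uses a simple dag_id attribute check, and the dag_file_processor_timeout and dagbag_import_timeout limits are omitted.

```python
import importlib.util
import tempfile
from pathlib import Path

# Contents of a stand-in "DAG file". StandInDag is hypothetical and
# only mimics an object that carries a dag_id.
SOURCE = '''
class StandInDag:
    def __init__(self, dag_id):
        self.dag_id = dag_id

first = StandInDag("example_one")
second = StandInDag("example_two")
not_a_dag = 42
'''


def load_module(path, module_name="sketch_dag_module"):
    """Load a Python file as a module; this executes its top-level code."""
    spec = importlib.util.spec_from_file_location(module_name, path)
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)
    return module


def find_dags(module):
    """Collect module-level instances (not classes) that expose a dag_id."""
    return [
        obj for obj in vars(module).values()
        if not isinstance(obj, type) and hasattr(obj, "dag_id")
    ]


with tempfile.TemporaryDirectory() as tmp:
    dag_file = Path(tmp) / "example_dags.py"
    dag_file.write_text(SOURCE)
    module = load_module(dag_file)
    dag_ids = sorted(dag.dag_id for dag in find_dags(module))

print(dag_ids)  # ['example_one', 'example_two']
```

Because loading the module executes every top-level statement in the file, expensive work placed at module level runs on each parse, which is one reason the import step is bounded by a timeout.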