Managing Connections¶

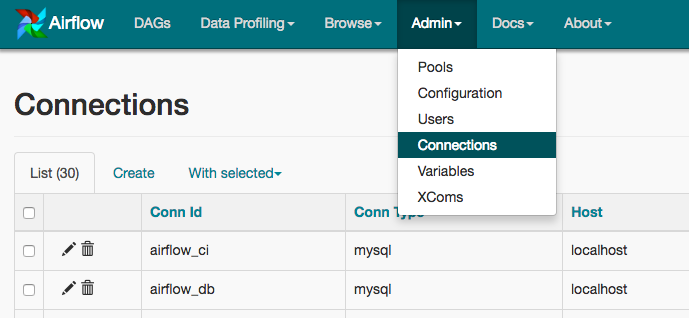

Airflow needs to know how to connect to your environment. Information

such as hostname, port, login and passwords to other systems and services is

handled in the Admin->Connections section of the UI. The pipeline code you

will author will reference the ‘conn_id’ of the Connection objects.

Connections can be created and managed using either the UI or environment variables.

See the Connenctions Concepts documentation for more information.

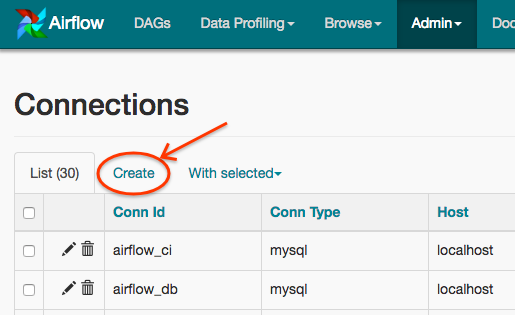

Creating a Connection with the UI¶

Open the Admin->Connections section of the UI. Click the Create link

to create a new connection.

- Fill in the

Conn Idfield with the desired connection ID. It is recommended that you use lower-case characters and separate words with underscores. - Choose the connection type with the

Conn Typefield. - Fill in the remaining fields. See Connection Types for a description of the fields belonging to the different connection types.

- Click the

Savebutton to create the connection.

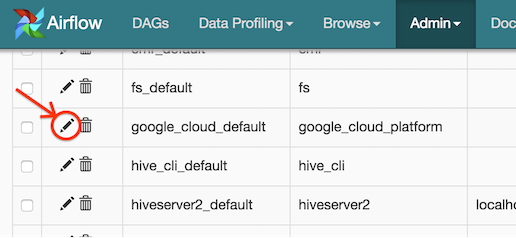

Editing a Connection with the UI¶

Open the Admin->Connections section of the UI. Click the pencil icon next

to the connection you wish to edit in the connection list.

Modify the connection properties and click the Save button to save your

changes.

Creating a Connection with Environment Variables¶

Connections in Airflow pipelines can be created using environment variables.

The environment variable needs to have a prefix of AIRFLOW_CONN_ for

Airflow with the value in a URI format to use the connection properly.

When referencing the connection in the Airflow pipeline, the conn_id

should be the name of the variable without the prefix. For example, if the

conn_id is named postgres_master the environment variable should be

named AIRFLOW_CONN_POSTGRES_MASTER (note that the environment variable

must be all uppercase). Airflow assumes the value returned from the

environment variable to be in a URI format (e.g.

postgres://user:password@localhost:5432/master or

s3://accesskey:secretkey@S3).

Connection Types¶

Google Cloud Platform¶

The Google Cloud Platform connection type enables the GCP Integrations.

Authenticating to GCP¶

There are two ways to connect to GCP using Airflow.

- Use Application Default Credentials, such as via the metadata server when running on Google Compute Engine.

- Use a service account key file (JSON format) on disk.

Default Connection IDs¶

The following connection IDs are used by default.

bigquery_default- Used by the

BigQueryHookhook. google_cloud_datastore_default- Used by the

DatastoreHookhook. google_cloud_default- Used by the

GoogleCloudBaseHook,DataFlowHook,DataProcHook,MLEngineHook, andGoogleCloudStorageHookhooks.

Configuring the Connection¶

- Project Id (required)

- The Google Cloud project ID to connect to.

- Keyfile Path

Path to a service account key file (JSON format) on disk.

Not required if using application default credentials.

- Keyfile JSON

Contents of a service account key file (JSON format) on disk. It is recommended to Secure your connections if using this method to authenticate.

Not required if using application default credentials.

- Scopes (comma separated)

A list of comma-separated Google Cloud scopes to authenticate with.

Note

Scopes are ignored when using application default credentials. See issue AIRFLOW-2522.

MySQL¶

The MySQL connect type allows to connect with MySQL database.

Configuring the Connection¶

- Host (required)

- The host to connect to.

- Schema (optional)

- Specify the schema name to be used in the database.

- Login (required)

- Specify the user name to connect.

- Password (required)

- Specify the password to connect.

- Extra (optional)

Specify the extra parameters (as json dictionary) that can be used in mysql connection. The following parameters are supported:

- charset: specify charset of the connection

- cursor: one of “sscursor”, “dictcursor, “ssdictcursor” - specifies cursor class to be used

- local_infile: controls MySQL’s LOCAL capability (permitting local data loading by clients). See MySQLdb docs for details.

- unix_socket: UNIX socket used instead of the default socket

- ssl: Dictionary of SSL parameters that control connecting using SSL (those parameters are server specific and should contain “ca”, “cert”, “key”, “capath”, “cipher” parameters. See MySQLdb docs for details. Note that in order to be useful in URL notation, this parameter might also be a string where the SSL dictionary is a string-encoded JSON dictionary.

Example “extras” field:

{ "charset": "utf8", "cursorclass": "sscursor", "local_infile": true, "unix_socket": "/var/socket", "ssl": { "cert": "/tmp/client-cert.pem", "ca": "/tmp/server-ca.pem'", "key": "/tmp/client-key.pem" } }

or

{ "charset": "utf8", "cursorclass": "sscursor", "local_infile": true, "unix_socket": "/var/socket", "ssl": "{\"cert\": \"/tmp/client-cert.pem\", \"ca\": \"/tmp/server-ca.pem\", \"key\": \"/tmp/client-key.pem\"}" }

When specifying the connection as URI (in AIRFLOW_CONN_* variable) you should specify it following the standard syntax of DB connections, where extras as passed as parameters of the URI (note that all components of the URI should be URL-encoded).

For example:

mysql://mysql_user:XXXXXXXXXXXX@1.1.1.1:3306/mysqldb?ssl=%7B%22cert%22%3A+%22%2Ftmp%2Fclient-cert.pem%22%2C+%22ca%22%3A+%22%2Ftmp%2Fserver-ca.pem%22%2C+%22key%22%3A+%22%2Ftmp%2Fclient-key.pem%22%7DNote

If encounter UnicodeDecodeError while working with MySQL connection check the charset defined is matched to the database charset.